Ray Hilborn Says Recent Science Paper Makes Inflated Claim about Human Impacts on Marine Species

— Posted with permission of SEAFOODNEWS.COM. Please do not republish without their permission. —

Copyright © 2015 Seafoodnews.com

Ray says the authors made a mistake in looking only at individual predators and not considering all predation on a given species. He says that when all predation is considered, the results reverse, and that natural predators take a larger proportion of adult marine fish than humans do. His comment is below:

Comment by Ray Hilborn, University of Washington

This paper claims that humans have an exploitation rate up to 14 times higher than that of natural predators. There is a basic flaw in the analysis which diminishes the validity of the conclusions the authors come to. First, the calculated predation rate of natural predators will depend on how many predators you look at. Dozens or even hundreds of species may prey upon a given species, most of them taking a trivial fraction of the prey. If you find data only for the most important predators (the ones that take the most of the prey species) you will estimate a high predation rate, but if you find data for all the species that prey upon a species the median will be much, much lower.

In fact there are hundreds of potential predators for any species, and most take none of the prey species, so if you had data for all of them you would say that the average predation rate was nearly zero for natural predators. Thus the more data on predation rate for individual species you can find, and the more you find predation data for trivial predators, the lower you will estimate “average” predation. However, if you look at the predators who take the most of the specific prey, the fraction of the prey taken will be much higher, and often more than humans take.

The more important question is: what is the total predation rate compared to the human exploitation rate? One has to read the Darimont paper carefully to realize they are talking about rates of individual predatory species, not rates of predators as a whole. For instance, their abstract says “humans kill adult prey… at much higher median rates than other predators (up to 14 times higher).” Thus they are comparing the rates of all other predatory species, taken one at a time, to that of humans. There may be natural predators who have a very high predation rate (higher than humans), but they are masked by the average of other predators with low rates. The clear implication is that we take more adults than do predators. Much of the media coverage interprets their results this way.

This is absolutely not true, as shown in the analysis below, which shows that humans take about ½ as many adult fish as marine predators.

Chart: Ray Hilborn

To compare the rates of fishing mortality to rates of natural mortality (almost all of which is from predation), I used the RAM Legacy Stock Assessment Data Base (www.ramlegacy.org), the same data base used by Darimont et al. to obtain fishing mortality rates. I selected the 223 fish stocks for which we had both natural mortality and human exploitation rates, and plotted the distribution of the two in the graph below. We find that fishing mortality on adult fish is on average roughly ½ of the predation rate, not 14 times higher as the abstract of their paper would lead you to believe. Remember, Darimont et al. were not looking at all of predation, but counting each predator as an individual data point. In aggregate, predators take far more adult fish than do humans, but you would not understand that by reading the Darimont paper.

The authors conclude by arguing that globally humans are unsustainable predators. This flies in the face of the fact that we have considerable empirical evidence that we can sustainably harvest fish and wildlife populations. The basic key to sustainable harvesting is keeping the fraction exploited at a level that can be sustained in the long term, and adjusting harvest up and down as populations fluctuate.

The Food and Agriculture Organization of the United Nations provides the most comprehensive analysis of the status of global fisheries and estimates that about 30% of global fish stocks are overexploited – the other 70% are at levels of abundance that are generally considered sustainable.

Many fisheries are evaluated by independent organizations like the Marine Stewardship Council and Monterey Bay Aquarium and classified as “sustainable,” yet Darimont and co-authors suggest that no fisheries are sustainably managed.

As an example, sockeye salmon in Bristol Bay, Alaska, have been sustainably managed for over a century and have been evaluated as sustainable by every independent organization. The key is limiting harvest so that enough fish reach the spawning grounds to replenish the species. In this case humans take about 2/3 of the returning adult salmon – a much higher fraction than the predators, but it is sustainable and stocks are at record abundance.

Darimont and coauthors suggest we need to reduce exploitation pressure by as much as 10 fold. This may be true in some places, but in the US we manage fisheries quite successfully. We agree with the authors that management is key to keeping healthy and sustainable populations of fish and wildlife. However, instead of “emulating natural predators” and decreasing human exploitation across the board, we need to work to use our knowledge to expand good management practices to more species and areas of the world.
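Hilborn's distinction between the median rate of individual predators and the total predation rate can be made concrete with a small sketch. The ten predator rates and the human rate below are invented for illustration; they are not taken from the RAM Legacy database or from the Darimont paper. The sketch also assumes, as a simplification, that the mortality rates imposed by different predators can be added together.

```python
from statistics import median

# Hypothetical annual mortality rates imposed on one prey species by ten
# natural predators (made-up numbers, not from any dataset).
predator_rates = [0.20, 0.10, 0.05, 0.02, 0.01, 0.005, 0.005, 0.005, 0.003, 0.002]

# Hypothetical human exploitation rate on the same prey species.
human_rate = 0.20

# Per-predator view: each predator is one data point, summarized by the median.
median_predator_rate = median(predator_rates)

# Aggregate view: all natural predation on the prey species combined
# (assumes the individual rates are additive).
total_predation_rate = sum(predator_rates)

ratio_vs_median = human_rate / median_predator_rate
ratio_vs_total = human_rate / total_predation_rate

print(f"median single-predator rate: {median_predator_rate:.4f}")
print(f"total predation rate:        {total_predation_rate:.4f}")
print(f"human rate / median rate:    {ratio_vs_median:.1f}")
print(f"human rate / total rate:     {ratio_vs_total:.2f}")
```

With these made-up numbers the human rate is more than 20 times the median single-predator rate, yet only half of total predation. Which denominator is used changes the comparison entirely, which is the point of Hilborn's objection.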

Subscribe to seafoodnews.com

Letters: Grossman Article on Reasons for Sardine Decline Inaccurate

— Posted with permission of SEAFOODNEWS.COM. Please do not republish without their permission. —

SEAFOODNEWS.COM [Letters] - June 23, 2015

Editor’s Note: The following letter from D.B. Pleschner was reviewed and supported by Mike Okoniewski of Pacific Seafoods.

To the Editor: I take exception to your statement: “The author of this piece, Elizabeth Grossman, buys into the argument, but in a fair article.”

In no way was this “fair” reporting. She selectively quotes (essentially misquotes) both Mike Okoniewski and me (and this after I spent more than an hour with her on the phone, and shared with her the statements of Ray Hilborn, assessment author Kevin Hill and other noted scientists). She does not balance the article but rather fails to emphasize the NOAA best science in favor of the Demer-Zwolinski paper, published in NAS by NOAA scientists who did not follow protocol for internal review before submitting to NAS (which would have caught many misstatements before they saw print).

NOAA’s Alec MacCall later printed a clarification (in essence a rebuttal) in NAS, which pointed out the errors and stated that the conclusions in the Demer paper were “one man’s opinion”.

Oceana especially has widely touted that paper, notwithstanding the fact that the SWFSC Center Director also needed to testify before the PFMC twice, stating that the paper’s findings did not represent NOAA’s scientific thinking.

After the Oceana brouhaha following the sardine fishery closure, NOAA Assistant Administrator Eileen Sobeck issued a statement. SWFSC Director Cisco Werner wrote to us in response to our request to submit Eileen’s statement to the Yale and Food & Environment Reporting Network to set the record straight:

“The statement from the NMFS Assistant Administrator (Eileen Sobeck) was clear about what the agency's best science has put forward regarding the decline in the Pacific Sardine population. Namely, without continued successful recruitment, the population of any spp. will decline - irrespective of imposed management strategies.”

It is also important to note that we are working closely with the SWFSC and have worked collaboratively whenever possible.

I would greatly appreciate it if you would again post Sobeck’s statement to counter the inaccurate implications and misstatements in Elizabeth Grossman’s piece.

Diane Pleschner-Steele
California Wet Fish Producers Association

PS: I also informed Elizabeth Grossman when we talked that our coastal waters are now teeming with both sardines and anchovy, which the scientific surveys have been unable to document because the research ships survey offshore and the fish are inshore.

Sobeck’s statement follows:

Researchers, Managers, and Industry Saw This Coming: Boom-Bust Cycle Is Not a New Scenario for Pacific Sardines

A Message from Eileen Sobeck, Head of NOAA Fisheries

April 23, 2015

Pacific sardines have a long and storied history in the United States. These pint-size powerhouses of the ocean have been -- on and off -- one of our most abundant fisheries. They support the larger ecosystem as a food source for other marine creatures, and they support a valuable commercial fishery.

When conditions are good, this small, highly productive species multiplies quickly. It can also decline sharply at other times, even in the absence of fishing. So it is known for wide swings in its population.

Recently, NOAA Fisheries and the Pacific Fishery Management Council received scientific information as a part of the ongoing study and annual assessment of this species. This information showed the sardine population had continued to decline.

It was not a surprise. Scientists, the Council, NOAA, and the industry were all aware of the downward trend over the past several years and have been following it carefully. Last week, the Council urged us to close the directed fishery on sardines for the 2015 fishing season. NOAA Fisheries is also closing the fishery now for the remainder of the current fishing season to ensure the quota is not exceeded.

While these closures affect the fishing community, they also provide an example of our effective, dynamic fishery management process in action. Sardine fisheries management is designed around the natural variability of the species and its role in the ecosystem as forage for other species. It is driven by science and data, and catch levels are set far below levels needed to prevent overfishing.

In addition, a precautionary measure is built into sardine management to stop directed fishing when the population falls below 150,000 metric tons. The 2015 stock assessment resulted in a population estimate of 97,000 metric tons, below the fishing cutoff, thereby triggering the Council action.

The sardine population is presently not overfished and overfishing is not occurring. However, the continued lack of recruitment of young fish into the stock in the past few years would have decreased the population, even without fishing pressure. So, these closures were a “controlled landing”. We saw where this stock was heading several years ago and everyone was monitoring the situation closely.

This decline is a part of the natural cycle in the marine environment. And if there is a new piece to this puzzle -- such as climate change -- we will continue to work closely with our partners in the scientific and management communities, the industry, and fishermen to address it.

Read/Download Elizabeth Grossman's article: Some Scientists and NGO’s Argue West Coast Sardine Closure was too Late


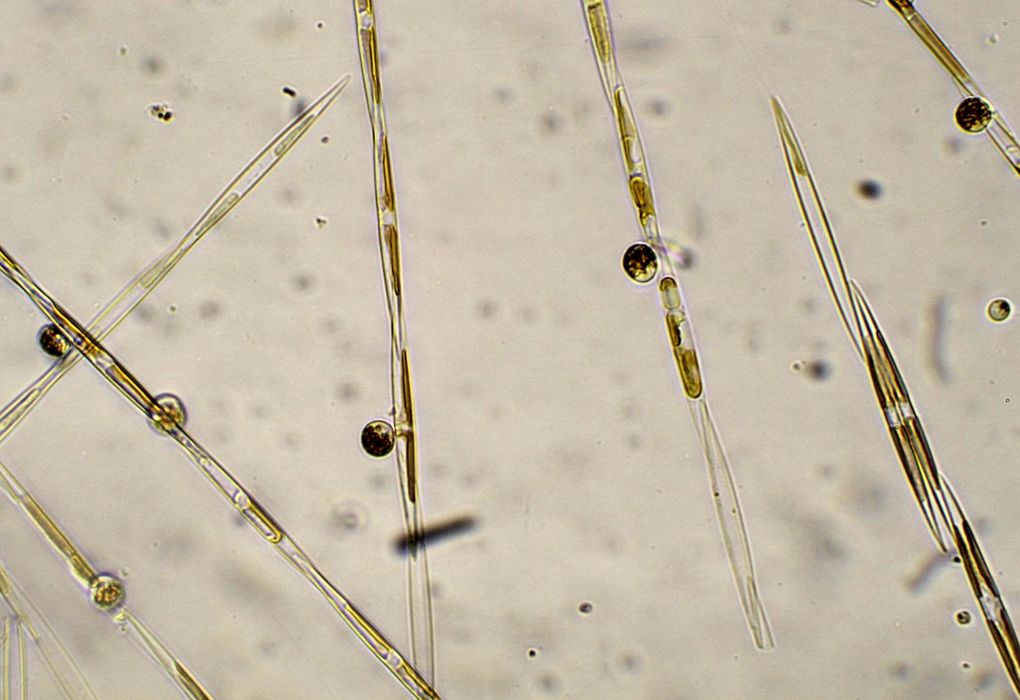

Toxic algae bloom might be largest ever

Scientists onboard a NOAA research vessel are beginning a survey of what could be the largest toxic algae bloom ever recorded off the West Coast.

A team of federal biologists set out from Oregon Monday to survey what could be the largest toxic algae bloom ever recorded off the West Coast.

The effects stretch from Central California to British Columbia, and possibly as far north as Alaska. Dangerous levels of the natural toxin domoic acid have shut down recreational and commercial shellfish harvests in Washington, Oregon and California this spring, including the lucrative Dungeness crab fishery off Washington’s southern coast and the state’s popular razor-clam season.

At the same time, two other types of toxins rarely seen in combination are turning up in shellfish in Puget Sound and along the Washington coast, said Vera Trainer, manager of the Marine Microbes and Toxins Programs at the Northwest Fisheries Science Center in Seattle.

“The fact that we’re seeing multiple toxins at the same time, we’re seeing high levels of domoic acid, and we’re seeing a coastwide bloom — those are indications that this is unprecedented,” Trainer said.

Scientists suspect this year’s unseasonably high temperatures are playing a role, along with “the blob” — a vast pool of unusually warm water that blossomed in the northeastern Pacific late last year. The blob has morphed since then, but offshore waters are still about two degrees warmer than normal, said University of Washington climate scientist Nick Bond, who coined the blob nickname.

“This is perfect plankton-growing weather,” said Dan Ayres, coastal shellfish manager for the Washington Department of Fish and Wildlife.

Domoic-acid outbreaks aren’t unusual in the fall, particularly in razor clams, Ayres said. But the toxin has never hit so hard in the spring, or required such widespread closures for crabs.

“This is new territory for us,” Ayres said. “We’ve never had to close essentially half our coast.”

Heat is not the only factor spurring the proliferation of the marine algae that produce the toxins, Trainer said. They also need a rich supply of nutrients, along with the right currents to carry them close to shore.

Scientists onboard the NOAA research vessel Bell M. Shimada will collect water and algae samples, measure water temperatures and also test fish like sardines and anchovies that feed on plankton. The algae studies are being integrated with the ship’s prime mission, which is to assess West Coast sardine and hake populations.

The ship will sample from the Mexican border to Vancouver Island in four separate legs.

“By collecting data over the full West Coast with one ship, we will have a much better idea of where the bloom is, what is causing it, and why this year,” University of California, Santa Cruz ocean scientist Raphael Kudela said in an email.

He and his colleagues found domoic-acid concentrations in California anchovies this year as high as any ever measured. “We haven’t seen a bloom that is this toxic in 15 years,” he wrote. “This is possibly the largest event spatially that we’ve ever recorded.”

On Washington’s Long Beach Peninsula, Ayres recently spotted a sea lion wracked by seizures typical of domoic-acid poisoning. The animal arched its neck repeatedly, then collapsed into a fetal position and quivered. “Clearly something neurological was going on,” he said.

Wildlife officials euthanized the creature and collected fecal samples that confirmed it had eaten prey — probably small fish — that in turn had fed on the toxic algae.

Ayres’ crews collect water and shellfish samples from around the state, many of which are analyzed at the Washington Department of Health laboratory in Seattle. DOH also tests commercially harvested shellfish, so consumers can be confident that anything they buy in a market is safe to eat, said Jerry Borchert, the state’s marine biotoxin coordinator.

But for recreational shellfish fans, the situation has been fraught this year even inside Puget Sound.

“It all really started early this year,” Borchert said.

Domoic-acid contamination is rare in Puget Sound, but several beds have been closed this year because of the presence of the toxin that causes paralytic shellfish poisoning (PSP) and a relatively new threat called diarrhetic shellfish poisoning (DSP). The first confirmed case of DSP poisoning in the United States occurred in 2011 in a family that ate mussels from Sequim Bay on the Olympic Peninsula, Borchert said.

But 2015 is the first time regulators have detected dangerous levels of PSP, DSP and domoic acid in the state at the same time — and in some cases, in the same places, he said. “This has been a really bad year overall for biotoxins.”

Over the past decade, Trainer and her colleagues have been working on models to help forecast biotoxin outbreaks in the same way meteorologists forecast long-term weather patterns, like El Niño. They’re also trying to figure out whether future climate change is likely to bring more frequent problems.

At a recent conference in Sweden on that very question, everyone agreed that “climate change, including warmer temperatures, changes in wind patterns, ocean acidification, and other factors will influence harmful algal blooms,” Kudela wrote. “But we also agreed we don’t really have the data yet to test those hypotheses.”

On past research voyages, Trainer and her team discovered offshore hot spots that seem to be the initiation points for outbreaks. There’s one in the so-called Juan de Fuca Eddy, where the California current collides with currents flowing from the Strait of Juan de Fuca. Another is Heceta Bank, a shallow, productive fishing ground off the Oregon coast, where nutrient-rich water wells up from the deep.

“These hot spots are sort of like crockpots, where the algal cells can grow and get nutrients and just stew,” Trainer said.

Scientists have also unraveled the way currents can sweep algae from the crockpots to the shore. “But what we still don’t know is why are these hot spots hotter in certain years than others,” Trainer said. “Our goal is to try to put this story together once we have data from the cruises.”

Read the original post: http://www.seattletimes.com

New study shows Arctic Ocean rapidly becoming more corrosive to marine species

Chukchi and Beaufort Seas could become less hospitable to shelled animals by 2030

New research by NOAA, University of Alaska, and Woods Hole Oceanographic Institution in the journal Oceanography shows that surface waters of the Chukchi and Beaufort seas could reach levels of acidity that threaten the ability of animals to build and maintain their shells by 2030, with the Bering Sea reaching this level of acidity by 2044.

“Our research shows that within 15 years, the chemistry of these waters may no longer be saturated with enough calcium carbonate for a number of animals from tiny sea snails to Alaska King crabs to construct and maintain their shells at certain times of the year,” said Jeremy Mathis, an oceanographer at NOAA’s Pacific Marine Environmental Laboratory and lead author. “This change due to ocean acidification would not only affect shell-building animals but could ripple through the marine ecosystem.”

A team of scientists led by Mathis and Jessica Cross from the University of Alaska Fairbanks collected observations on water temperature, salinity and dissolved carbon during two month-long expeditions to the Bering, Chukchi and Beaufort Seas onboard United States Coast Guard cutter Healy in 2011 and 2012.

These data were used to validate a predictive model for the region that calculates the change over time in the amount of calcium and carbonate ions dissolved in seawater, an important indicator of ocean acidification. The model suggests these levels will drop below the current range in 2025 for the Beaufort Sea, 2027 for the Chukchi Sea and 2044 for the Bering Sea. “A key advance of this study was combining the power of field observations with numerical models to better predict the future,” said Scott Doney, a coauthor of the study and a senior scientist at the Woods Hole Oceanographic Institution.

A form of calcium carbonate in the ocean, called aragonite, is used by animals to construct and maintain shells. When calcium and carbonate ion concentrations slip below tolerable levels, aragonite shells can begin to dissolve, particularly at early life stages. As the water chemistry slips below the present-day range, which varies by season, shell-building organisms and the fish that depend on these species for food can be affected.

This region is home to some of our nation’s most valuable commercial and subsistence fisheries. NOAA’s latest Fisheries of the United States report estimates that nearly 60 percent of U.S. commercial fisheries landings by weight are harvested in Alaska. These 5.8 billion pounds brought in $1.9 billion in wholesale values, or one third of all landings by value in the U.S. in 2013.

The continental shelves of the Bering, Chukchi and Beaufort Seas are especially vulnerable to the effects of ocean acidification because the absorption of human-caused carbon dioxide emissions is not the only process contributing to acidity. Melting glaciers, upwelling of carbon-dioxide rich deep waters, freshwater input from rivers and the fact that cold water absorbs more carbon dioxide than warmer waters exacerbate ocean acidification in this region.

“The Pacific-Arctic region, because of its vulnerability to ocean acidification, gives us an early glimpse of how the global ocean will respond to increased human-caused carbon dioxide emissions, which are being absorbed by our ocean,” said Mathis. “Increasing our observations in this area will help us develop the environmental information needed by policy makers and industry to address the growing challenges of ocean acidification.”
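The article does not give the model's equations. One common way to express whether seawater is "saturated with enough calcium carbonate" is the aragonite saturation state: the product of the calcium and carbonate ion concentrations divided by the solubility product of aragonite. Values above 1 mean the water is supersaturated, and values below 1 favor dissolution. The sketch below illustrates that ratio only. The constants are round, assumed numbers chosen for illustration, not values from the study, and the study's own threshold (the present-day range) is not necessarily a saturation state of 1.

```python
# Illustrative constants only (assumptions, not values from the study).
CALCIUM = 0.0103        # mol/kg, assumed calcium ion concentration
KSP_ARAGONITE = 6.5e-7  # mol^2/kg^2, assumed solubility product; the real
                        # value varies with temperature, salinity and pressure


def aragonite_saturation(carbonate, calcium=CALCIUM, ksp=KSP_ARAGONITE):
    """Return the saturation state: [Ca2+] * [CO3 2-] / Ksp."""
    return calcium * carbonate / ksp


# Hypothetical carbonate ion concentrations in micromoles per kilogram.
for carbonate_umol in (150, 100, 60):
    omega = aragonite_saturation(carbonate_umol * 1e-6)
    status = "supersaturated" if omega > 1 else "undersaturated"
    print(f"carbonate {carbonate_umol:>3} umol/kg: omega = {omega:.2f} ({status})")
```

Under these assumed constants, lowering the carbonate ion concentration is enough on its own to move the water from supersaturated to undersaturated, which is the mechanism the article describes.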

The crew lowers sensors that measure water temperature, salinity and dissolved carbon in the Arctic Ocean. (Mathis/NOAA)

Read the original post: http://research.noaa.gov

4 Questions with David Battisti on El Niño and Climate Variability

This year’s spring Houghton Lecturer is David Battisti, a professor of atmospheric sciences and the Tamaki Endowed Chair at the University of Washington. As the scientist-in-residence within MIT’s Program of Atmospheres, Oceans, and Climate (PAOC), Battisti has spent the semester giving a series of talks on natural variability in the climate system. Some of his main research interests include illuminating the processes that underlie past and present climates, understanding how interactions between the ocean, atmosphere, land, and sea ice lead to climate variability on different timescales, and improving El Niño models and their forecast skill—something that is becoming increasingly relevant in a warming world.

Credit: National Oceanic and Atmospheric Administration


The Pacific Ocean is primed for a powerful double El Niño—a rare phenomenon in which there are two consecutive years of episodic warming of sea surface temperatures—according to some scientists. It’s been a few years since the Pacific Ocean experienced one strong warming event, let alone an event that spanned two consecutive years. A double El Niño could have large ripple effects in weather systems around the globe, from summer monsoons and hurricanes to winter storms in the Northern Hemisphere. Meanwhile, some scientists think it may signal the beginning of the end of the warming hiatus. Oceans at MIT asked Battisti about this phenomenon and what it does and doesn’t tell us about climate change.

How rare are double El Niños and what are the expected effects?

[Double El Niños] are not unheard of, but the last time it stayed warm for nearly two full years was back in the early 80s. In the tropics, climate anomalies associated with a typical El Niño event will persist as long as the event persists. For example, El Niño warm and cold events explain the lion’s share of the variance in monsoon onset date: conditions in late boreal summer cause a delay in the onset of the monsoon in Indonesia, which greatly reduces the annual production of the country’s staple food, rice. If El Niño conditions persist for two years spanning the onset time for the Indonesia monsoon, monsoon onset will very likely be delayed for two consecutive years.

In the mid-latitudes of the Northern Hemisphere, where we live, El Niño affects the climate by issuing persistent, large scale atmospheric waves from the tropical Pacific to the North Pacific and over most of North America. These waves are most efficient at reaching the mid-latitudes during our wintertime. If El Niño conditions span two consecutive northern hemisphere winters, we should expect the winter climate in these regions to be affected similarly over two consecutive winters.

During an El Niño event, there is a greater than normal chance for an unusually warm winter in the Pacific Northwest and in the north central US, and for a colder and wetter than normal winter in southern Florida. By contrast, El Niño has little impact on winter weather in New England. It also greatly affects precipitation in Southern California and the southwestern US — El Niño years are reliably wetter than normal, but just how much wetter than normal is very unpredictable.

Why haven’t we seen a strong El Niño in nearly two decades?

A large El Niño event is characterized by exceptionally warm conditions in the tropical Pacific, or by very warm conditions that persist for 18 months or so – about nine months longer than normal. It’s been over 20 years since we’ve seen a very large warm event, but it is not known how frequently very strong and exceptionally long events happen.

We categorize El Niño events (and their cold event siblings) by measuring sea surface temperature and zonal surface wind stress along the equator in the tropical Pacific. Good data to construct these indices extend back to the early 20th Century. Unfortunately, we can’t answer this question by examining the behavior of the high-end climate models because only about two high-end climate models in the world feature El Niño warm and cold events that are consistent with observations. However, the observational record shows three El Niño events with exceptionally large amplitude that were exceptionally long lived since the 1950s, so a 20-year gap since the last large warm event is not surprising.

What does the hiatus refer to, and is it related to the El Niño phenomenon?

The whole hiatus idea is based on the expectation that as carbon dioxide increases, so too should the global average temperature. And indeed, the global averaged temperature has increased over the course of the 20th Century by approximately 0.85 degrees Celsius. And climate models support that the primary reason for the 20th Century increase is rising concentrations of greenhouse gases associated with human activity. However, over the past dozen years or so, the global average temperature has not increased – hence the moniker ‘the hiatus’.

The decade-long hiatus isn’t inconsistent with what we would expect from natural variability and human-forced climate change. For example, a typical El Niño cycle features a very warm year, followed by a moderately cold year, and then nothing happens for a while. Somewhere between three and seven years later there’s another warm event followed by a cold event, but the duration between these events is quite random. Selecting any single period—for example, the last 10 years—we would expect decades in which the global average temperature fluctuates by 0.15 degrees Celsius or so due to the randomness in natural climate variability. The regional patterns of temperature change and the hiatus in global average temperature over the past decade aren’t distinguishable from a superposition of the cold phase of natural variability with the expected warming due to human activity.

Is this a sign that the warming hiatus is coming to an end?

El Niño does increase the global average temperature, so we will see the average global temperature spike a bit this year compared to the last few years, which will bring us back up toward what the models say is the forced warming response. But El Niño events are not predictable more than a year or so in advance, so it is not possible to say what will happen over the next few years, or even the next decade.

On the other hand, if you view the change in global average temperature over the past thirty years as being a superposition of a steady increase due to human-induced forcing and decade-long periods of warm (the 1980s and 1990s) and cold (2002-2013) anomalies due to natural variability including El Niño, then decade-long periods of very large warming and very weak warming or even weak cooling should be expected. Exactly when these periods end is only obvious in retrospect.

For 25 years, Henry Houghton served as Head of the Department of Meteorology—today known as PAOC. During his tenure, the department established an unsurpassed standard of excellence in these fields. The Houghton Fund was established to continue that legacy through support of students and the Houghton Lecture Series. Since its 1995 inception, more than two dozen scientists from around the world representing a wide range of disciplines within the fields of Atmosphere, Ocean and Climate have visited and shared their expertise with the MIT community.
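Battisti's description of the temperature record as a superposition of a steady forced increase and slower natural swings can be illustrated with a toy calculation. The sketch below is not a climate model. The trend, amplitude and period are assumed round numbers, and natural variability is reduced to a single sine wave purely to show how ten-year trends can differ from the underlying forced trend.

```python
import math

# Assumed, illustrative parameters (not fitted to any observations).
TREND = 0.015     # steady forced warming, degrees C per year
AMPLITUDE = 0.08  # size of the natural swing, degrees C
PERIOD = 20.0     # length of one full natural cycle, years


def temperature(year):
    """Toy temperature anomaly: linear trend plus a sinusoidal swing."""
    return TREND * year + AMPLITUDE * math.sin(2 * math.pi * year / PERIOD)


def decadal_trend(start, length=10):
    """Least-squares slope (degrees C per year) over `length` years."""
    years = list(range(start, start + length))
    temps = [temperature(y) for y in years]
    mean_year = sum(years) / length
    mean_temp = sum(temps) / length
    numerator = sum((y - mean_year) * (t - mean_temp) for y, t in zip(years, temps))
    denominator = sum((y - mean_year) ** 2 for y in years)
    return numerator / denominator


# Ten-year trends for every possible starting year in a 40-year record.
trends = [decadal_trend(start) for start in range(0, 31)]

print(f"underlying forced trend: {TREND:.4f} C/yr")
print(f"weakest 10-year trend:   {min(trends):.4f} C/yr")
print(f"strongest 10-year trend: {max(trends):.4f} C/yr")
```

Even though the forced trend in this toy series never changes, some ten-year windows show warming at more than twice the forced rate and others show slight cooling, depending only on where the window falls in the natural cycle.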

Originally posted: http://oceans.mit.edu

'Substantial' El Nino event predicted

The El Nino effect, which can drive droughts and flooding, is under way in the tropical Pacific, say scientists.

Australia's Bureau of Meteorology predicted that it could become a "substantial" event later in the year.

The phenomenon arises from variations in ocean temperatures.

The El Nino is still in its early stages, but has the potential to cause extreme weather around the world, according to forecasters.

US scientists announced in April that El Nino had arrived, but it was described then as "weak".

Australian scientists said models suggested it could strengthen from September onwards, but it was too early to determine with confidence how strong it could be.

"This is a proper El Nino effect, it's not a weak one," David Jones, manager of climate monitoring and prediction at the Bureau of Meteorology, told reporters.

"You know, there's always a little bit of doubt when it comes to intensity forecasts, but across the models as a whole we'd suggest that this will be quite a substantial El Nino event."

Aftermath of flooding in California put down to El Nino


An El Nino comes along about every two to seven years as part of a natural cycle.

Every El Nino is different, and once one has started, models can predict how it might develop over the next six to nine months, with a reasonable level of accuracy.

How can we predict El Nino?

In the tropical Pacific Ocean, scientists operate a network of buoys that measure temperature, currents and winds. The data - and other information from satellites and meteorological observations - is fed into complex computer models designed to predict an El Nino. However, the models cannot predict the precise intensity or duration of an El Nino, or the areas likely to be affected, more than a few months ahead. Researchers are trying to improve their models and observational work to give more advance notice.

A strong El Nino five years ago was linked with poor monsoons in Southeast Asia, droughts in southern Australia, the Philippines and Ecuador, blizzards in the US, heatwaves in Brazil and extreme flooding in Mexico.

Another strong El Nino event was expected during last year's record-breaking temperatures, but failed to materialise.

Prof Eric Guilyardi of the Department of Meteorology at the University of Reading said it would become clear in the summer whether this year might be different.

"The likelihood of El Nino is high but its eventual strength in the winter when it has its major impacts worldwide is still unknown," he said.

"We will know in the summer how strong it is going to be."

Weather patterns

The El Nino is a warming of the Pacific Ocean as part of a complex cycle linking atmosphere and ocean.

The phenomenon is known to disrupt weather patterns around the world, and can bring wetter winters to the southwest US and droughts to northern Australia.

The consequences of El Nino are much less clear for Europe and the UK.

Research suggests that extreme El Nino events will become more likely as global temperatures rise.

Originally posted at: www.bbc.com

Why Poop-Eating Vampire Squid Make Patient Parents

The mysterious vampire squid is not actually a vampire or a squid–it’s an evolutionary relict that feeds on detritus. (MBARI)


Squid and octopuses are famous for their “live fast, die young” strategy. At one year old or younger, they spawn masses of eggs and die immediately. But scientists have just discovered a striking exception, reported April 20 in the journal Current Biology.

Females of the bizarre species known as “vampire squid” can reproduce dozens of times and live up to eight years. This strategy is probably related to the vampire squid’s slow metabolism and its habit of eating poop.

These shoebox-sized animals have fascinated biologists since their discovery in 1903, not because of any actual vampiric habits, but because of their puzzling place within the cephalopods, the group of animals that contains squids and octopuses.

Vampire squid are neither squid nor octopus, and they’re tricky to study because they live hundreds of meters below the surface, in frigid water with very little oxygen.

In addition to eight webbed arms, they have two strange thread-like filaments, whose purpose (collecting waste for the vampire squid to eat) wasn’t understood until 2012. A clear picture of the habits and evolution of these animals remains elusive.

Take a Rest Between Eggs

Henk-Jan Hoving, currently at the Helmholtz Centre for Ocean Research in Kiel, Germany, began his investigation of vampire squid while at the Monterey Bay Aquarium Research Institute. For the spawning study, he worked with specimens that had been collected by net off southern California and stored in jars at the Santa Barbara Museum of Natural History.

Out of 27 adult females, Hoving and his colleagues found that 20 had “resting ovaries” without any ripe or developing eggs inside. However, all had proof of previous spawning.

As in humans, developing eggs are surrounded by a group of cells called a follicle. After a mature egg is released, the follicle is slowly resorbed by the ovary. The resorption process in vampire squid is so slow, in fact, that the scientists could read each animal’s reproductive history in its ovaries.

Counting 38 to 100 separate spawning events in the most advanced female, and estimating that at least a month elapsed between each event, Hoving and his co-authors concluded that adult female vampire squid spend three to eight years alternately spawning and resting.

This length of time is reminiscent of the deep-sea octopus who brooded her eggs for over four years. In both cases, the animals’ actual lifespan must be longer than their reproductive period, which suggests truly venerable ages for members of a group whose most common representatives live for just a few months. These long life spans are related to a slow metabolism and the chill of the deep sea: around 2 to 7 degrees Celsius, or 35 to 44 Fahrenheit.

Limited Calories, But Limited Danger

A single spawning event is not actually a strict rule for octopuses and squid. A few species are known to spawn multiple batches of eggs, even as they continue to eat and grow. However, all species reach a continuous spawning phase at the end of their lives.

Once a female starts to lay, her body is in egg-production mode until she dies, her ovaries constantly producing. That’s why the discovery of a “resting phase” in the ovaries of vampire squid was so surprising.

But this unexpected strategy makes sense in the context of a vampire squid’s lifestyle. The mass spawnings of other cephalopods are fueled by a carnivorous diet of fish, crabs, shrimp and even fellow squids and octopuses.

By contrast, the fecal material and mucus that make up most vampire squid meals are not nearly as calorie-rich. The animals may be simply unable to muster enough energy to ripen all their eggs at once.

There’s an advantage, however, to living in the food-poor, oxygen-poor depths of the ocean. Few large predators can survive there for long, so vampire squid are relatively safe compared to their cousins, who are constantly on the run from fish, dolphins, whales, seabirds and each other.

When you face a high risk of being eaten on any given day, it’s a good idea to get all your eggs out as quickly as possible. But vampire squid are free to engage in leisurely, repetitive spawning. It’s the ultimate work-life balance: alternately popping out babies, then returning to business as usual.
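For readers who want to check the three-to-eight-year figure, here is a rough back-of-the-envelope sketch in Python. It assumes, as the article reports, 38 to 100 spawning events with at least one month between them; the function name `min_reproductive_years` is made up for this illustration, and the calculation treats each event as taking up one full interval, which is a simplification rather than the researchers' actual method.

```python
MONTHS_PER_YEAR = 12

def min_reproductive_years(spawning_events, months_between=1.0):
    """Lower bound on the reproductive period, in years.

    Assumes (hypothetically) one interval of `months_between` months
    per spawning event; the real spacing may well be longer.
    """
    return spawning_events * months_between / MONTHS_PER_YEAR

for events in (38, 100):
    years = min_reproductive_years(events)
    print(f"{events} spawning events -> at least {years:.1f} years")
```

The two ends of the counted range work out to a little over three years and a little over eight years, in line with the span quoted in the study.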

The vampire squid was named for its fearsome appearance, but those “spines” are just soft flaps of skin. (MBARI)

A fossil cephalopod from the Middle Jurassic, thought to be an early vampire squid.

Read the original post: http://blogs.kqed.org/

Ray Hilborn: Analysis Shows California Sardine Decline Not Caused by Too High Harvest Rate

Posted with permission from SEAFOODNEWS — Please do not repost without permission.

SEAFOODNEWS.COM [SeafoodNews] (Commentary) by Ray Hilborn April 22, 2015

Two items in the last week's fisheries news have again caused a lot of media and NGO interest in forage fish. The first was the publication in the Proceedings of the National Academy of Sciences of a paper entitled “Fishing amplifies forage fish population collapses”, and the second was the closure of the fishery for California sardine. Oceana in particular argued that overfishing is part of the cause of the sardine decline, and the take-home message from the PNAS paper seems to support this because it showed that in the years preceding a “collapse” fishing pressure was unusually high.

However, what the PNAS paper failed to highlight was the real cause of forage fish declines. Forage fish abundance is driven primarily by the birth and survival of juvenile fish, producing what is called “recruitment”. Forage fish declines are almost always caused by declines in recruitment, declines that often happen when stocks are large and fishing pressure is low. The typical scenario for a stock collapse is (1) recruitment declines at a time of high abundance, (2) abundance then begins to decline as fewer young fish enter the population, (3) the catch declines more slowly than abundance so the harvest rate increases, and then (4) the population reaches a critical level that was called “collapsed” in the PNAS paper.

Looking back at the years preceding a collapse, it appears that the collapse was caused by high fishing pressure, when in reality it was caused by a natural decline in recruitment that occurred several years earlier and was not caused by fishing.
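The four-step scenario above can be illustrated with a toy simulation. The sketch below is only an illustration of the mechanism, not an analysis of any real stock: every number in it (starting abundance, survival, recruitment levels, and the `catch_response` exponent that makes catch fall more slowly than abundance) is invented for the example, and the function name `simulate_collapse` is hypothetical.

```python
def simulate_collapse(years=15, decline_year=3):
    """Toy stock model: recruitment drops, catch lags, harvest rate rises.

    All parameter values are invented for illustration only.
    Returns a list of (year, abundance, catch, harvest_rate) tuples.
    """
    n0 = 1000.0              # starting abundance (arbitrary units)
    survival = 0.7           # fraction of uncaught fish surviving each year
    c0 = 100.0               # starting catch, i.e. a 10% harvest rate
    normal_recruits = 370.0  # chosen so the stock is steady before the decline
    low_recruits = 74.0      # recruitment after the natural decline
    catch_response = 0.5     # < 1 means catch falls more slowly than abundance

    abundance = n0
    history = []
    for year in range(years):
        # Step 3: catch tracks abundance only partially.
        catch = c0 * (abundance / n0) ** catch_response
        history.append((year, abundance, catch, catch / abundance))
        # Step 1: recruitment declines while abundance is still high.
        recruits = normal_recruits if year < decline_year else low_recruits
        # Step 2: abundance falls as fewer young fish enter the population.
        abundance = (abundance - catch) * survival + recruits
    return history


for year, abundance, catch, rate in simulate_collapse():
    print(f"year {year:2d}  abundance {abundance:7.1f}  "
          f"catch {catch:6.1f}  harvest rate {rate:5.1%}")
```

In this toy run the harvest rate starts climbing only after recruitment has already fallen, so a look back from the low point shows high fishing pressure in the preceding years even though the recruitment drop came first.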

The decline of California sardines did not follow this pattern, because the harvest control rule reduced the harvest as the stock declined; as fisheries management practices have improved, this has become standard practice. The average harvest rate for California sardines has been only 10% per year for the last 10 years, compared to a natural mortality rate of over 30% per year. Even if there had been no fishing, the decline in California sardines would have been almost exactly the same.

In many historical forage fish declines, fishing pressure was much higher: often well over 50% of the population was taken each year, and as the PNAS paper highlighted, this kind of fishing pressure does amplify the decline. However, many fisheries agencies have learned from this experience and not only keep fishing pressure much lower than in the past, but also reduce it more rapidly when recruitment declines.

So the lesson from the most recent decline of California sardine is that we have to adapt to the fluctuations that nature provides. Yes, sea lions and birds will suffer when their food declines, but this has been happening for thousands of years, long before industrial fishing. With good fisheries management, as is now practiced in the U.S. and elsewhere, forage fish declines will not be caused by fishing.

Ray Hilborn is a Professor in the School of Aquatic and Fishery Sciences, University of Washington, specializing in natural resource management and conservation. He is one of the most respected experts on marine fishery population dynamics in the world.

Subscribe to SEAFOODNEWS.COM to read the original post.